Chebyshev center

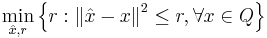

In geometry, the Chebyshev center of a bounded set $Q$ having non-empty interior is the center of the minimal-radius ball enclosing the entire set $Q$, or, alternatively (and in general non-equivalently), the center of the largest ball inscribed in $Q$ [1].
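For the inscribed-ball variant, the problem becomes a linear program when the set is a polyhedron $\{x : a_i^T x \le b_i,\ i = 1, \dots, m\}$. The sketch below follows the standard formulation in the convex optimization literature (see, e.g., Boyd and Vandenberghe). The symbols $x_c$ (center), $r$ (radius), $a_i$, $b_i$ and $m$ are notation assumed here, not taken from this article:

$$
\begin{aligned}
\max_{x_c,\, r} \quad & r \\
\text{s.t.} \quad & a_i^T x_c + r \left\| a_i \right\|_2 \le b_i, \quad i = 1, \dots, m.
\end{aligned}
$$

Each constraint says that the whole ball lies in the $i$-th half-space, because $\sup_{\|v\|_2 \le r} a_i^T (x_c + v) = a_i^T x_c + r \left\| a_i \right\|_2$.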

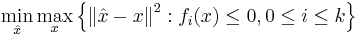

In the field of parameter estimation, the Chebyshev center approach tries to find an estimator $\hat x$ for $x$, given the feasibility set $Q$, such that $\hat x$ minimizes the worst possible estimation error for $x$ (i.e. best worst case).


Mathematical representation

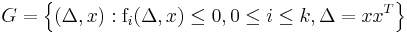

There exist several alternative representations for the Chebyshev center. Consider the set $Q$ and denote its Chebyshev center by $\hat x$. With respect to the Euclidean norm $\|\cdot\|$, $\hat x$ can be computed by solving:

$$\min_{\hat x,\, r} \left\{ r : \left\| \hat x - x \right\|^2 \le r \ \ \forall x \in Q \right\}$$

or alternatively by solving [2]:

$$\hat x = \operatorname*{\arg\min}_{\hat x} \max_{x \in Q} \left\| x - \hat x \right\|^2.$$
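The minimax form can be approximated numerically when $Q$ is replaced by a finite set of sample points. That replacement is an assumption; the article treats general sets. The sketch uses the Badoiu-Clarkson iteration, a simple approximation scheme for the minimum enclosing ball and not the method of the cited references. It repeatedly solves the inner maximization (find the farthest point) and takes a shrinking step toward that point. `approx_chebyshev_center` is a hypothetical helper name.

```python
import math


def approx_chebyshev_center(points, iterations=10_000):
    """Approximate argmin_c max_{p in points} ||p - c||^2 for a finite set.

    Badoiu-Clarkson iteration: at step t, move the current center a
    fraction 1/(t+1) of the way toward the farthest point. This is an
    approximation scheme, not the method of the cited references.
    """
    center = list(points[0])  # start from an arbitrary point of the set
    for t in range(1, iterations + 1):
        # Inner maximization: the point currently farthest from the center.
        far = max(points, key=lambda p: math.dist(p, center))
        # Outer minimization step: shrink toward that farthest point.
        center = [c + (f - c) / (t + 1) for c, f in zip(center, far)]
    # Radius of the enclosing ball around the final center.
    radius = max(math.dist(p, center) for p in points)
    return center, radius
```

For the four corners of the unit square, the returned center approaches $(0.5, 0.5)$ and the radius approaches $\sqrt{0.5}$. The error shrinks roughly like $1/\sqrt{t}$ in the number of iterations, so this is only practical as an illustration.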

Some important optimization properties of the Chebyshev center are:

- The Chebyshev center is unique.

- The Chebyshev center is feasible (i.e. $\hat x \in Q$) when $Q$ is convex.

Despite these properties, finding the Chebyshev center may be a hard numerical optimization problem. For example, in the second representation above, the inner maximization maximizes a convex function of $x$. That is a non-convex problem in general, even when $Q$ is convex.
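A one-dimensional illustration of why the inner maximization is non-convex even over a convex set. Here $Q$ is taken to be an interval and $\hat x$ is fixed. Projected gradient ascent on $(x - \hat x)^2$ ends at whichever endpoint lies on the starting side of $\hat x$, so a local method can miss the global maximum. The helper `projected_gradient_ascent` and its parameters are hypothetical.

```python
def projected_gradient_ascent(x_hat, lo, hi, x0, step=0.1, iters=200):
    """Local ascent on f(x) = (x - x_hat)**2 over the interval [lo, hi].

    f is convex, so maximizing it is a non-convex problem: the ascent
    converges to a local maximum (an endpoint) that depends on x0.
    """
    x = x0
    for _ in range(iters):
        grad = 2.0 * (x - x_hat)
        x = min(hi, max(lo, x + step * grad))  # ascent step, then project
    return x
```

With $\hat x = 0.3$ on $[0, 1]$, starting at $0.2$ ends at $0$ (value $0.09$), while starting at $0.4$ ends at $1$ (value $0.49$, the global maximum).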

Relaxed Chebyshev center

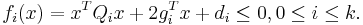

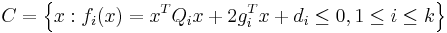

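Let us consider the case in which the set $Q$ can be represented as the intersection of $k+1$ ellipsoids:

$$Q = \left\{ x \mid f_i(x) \le 0,\ 0 \le i \le k \right\}$$

with

$$f_i(x) = x^T Q_i x + 2 g_i^T x + d_i, \quad Q_i \succeq 0, \quad 0 \le i \le k.$$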

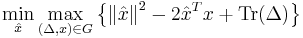

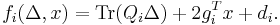

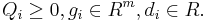

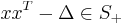
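Membership in an intersection of ellipsoids can be checked directly from the quadratic form $f_i(x) = x^T Q_i x + 2 g_i^T x + d_i \le 0$. In the sketch below, each constraint is given as a `(Q, g, d)` triple of NumPy arrays. The helper names and the tolerance `tol` are assumptions for illustration.

```python
import numpy as np


def quad_constraint(x, Q, g, d):
    """Evaluate f(x) = x^T Q x + 2 g^T x + d."""
    return float(x @ Q @ x + 2.0 * g @ x + d)


def in_feasible_set(x, constraints, tol=1e-12):
    """True if f_i(x) <= 0 (up to tol) for every (Q_i, g_i, d_i)."""
    return all(quad_constraint(x, Q, g, d) <= tol for Q, g, d in constraints)
```

For example, the unit disk is $(I, 0, -1)$. The unit disk centered at $(1, 0)$ is $(I, (-1, 0), 0)$, because $\|x - c\|^2 - 1 = x^T x - 2 c^T x + c^T c - 1$. The point $(0.5, 0)$ lies in both disks; $(-0.5, 0)$ lies only in the first.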

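By introducing an additional matrix variable $\Delta = x x^T$, we can write the inner maximization problem of the Chebyshev center as:

$$\max_{(\Delta, x) \in G} \left\{ \left\| \hat x \right\|^2 - 2 \hat x^T x + \operatorname{Tr}(\Delta) \right\}$$

where $\operatorname{Tr}(\cdot)$ is the trace operator and

$$G = \left\{ (\Delta, x) : f_i(\Delta, x) \le 0,\ 0 \le i \le k,\ \Delta = x x^T \right\},$$

$$f_i(\Delta, x) = \operatorname{Tr}(Q_i \Delta) + 2 g_i^T x + d_i.$$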

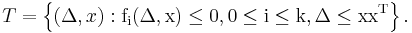
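The lifting rests on two identities that hold when $\Delta = x x^T$. First, $\|x - \hat x\|^2 = \|\hat x\|^2 - 2 \hat x^T x + \operatorname{Tr}(\Delta)$. Second, $x^T Q_i x = \operatorname{Tr}(Q_i \Delta)$. The sketch below checks both numerically on a random instance. The function name, dimension, seed and constant $d_i = -1$ are arbitrary choices for illustration.

```python
import numpy as np


def lifting_gaps(n=3, seed=0):
    """Return |direct - lifted| for the error and constraint identities."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n)
    x_hat = rng.standard_normal(n)
    B = rng.standard_normal((n, n))
    Q_i = B.T @ B                 # positive semidefinite, as for an ellipsoid
    g_i = rng.standard_normal(n)
    d_i = -1.0

    Delta = np.outer(x, x)        # the lifting Delta = x x^T

    # ||x - x_hat||^2  versus  ||x_hat||^2 - 2 x_hat^T x + Tr(Delta)
    err_direct = np.sum((x - x_hat) ** 2)
    err_lifted = x_hat @ x_hat - 2.0 * x_hat @ x + np.trace(Delta)

    # x^T Q_i x + 2 g_i^T x + d_i  versus  Tr(Q_i Delta) + 2 g_i^T x + d_i
    con_direct = x @ Q_i @ x + 2.0 * g_i @ x + d_i
    con_lifted = np.trace(Q_i @ Delta) + 2.0 * g_i @ x + d_i

    return abs(err_direct - err_lifted), abs(con_direct - con_lifted)
```

Both gaps are zero up to rounding. The only non-convex ingredient left is the equality $\Delta = x x^T$, which is what the relaxation replaces.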

Relaxing our demand on  by demanding

by demanding  , i.e.

, i.e.  where

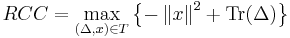

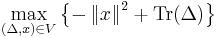

where  is the set of positive semi-definite matrices, and changing the order of the min max to max min (see the references for more details), the optimization problem can be formulated as:

is the set of positive semi-definite matrices, and changing the order of the min max to max min (see the references for more details), the optimization problem can be formulated as:

with

This last convex optimization problem is known as the relaxed Chebyshev center (RCC). The RCC estimate $\hat x_{RCC}$ is the $x$-component of its maximizer. The RCC has the following important properties:

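- The optimal value of the RCC is an upper bound on that of the exact Chebyshev center problem, since the relaxation enlarges the set over which the worst case is taken.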

- The RCC is unique.

- The RCC is feasible.
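A short derivation of the feasibility and uniqueness properties, assuming every $Q_i \succeq 0$ (as for ellipsoids). Suppose $\Delta - x x^T \succeq 0$ and the relaxed constraints $\operatorname{Tr}(Q_i \Delta) + 2 g_i^T x + d_i \le 0$ hold. The trace of a product of two positive semidefinite matrices is non-negative, so

$$
x^T Q_i x + 2 g_i^T x + d_i
= \operatorname{Tr}(Q_i \Delta) + 2 g_i^T x + d_i - \operatorname{Tr}\big(Q_i (\Delta - x x^T)\big)
\le \operatorname{Tr}(Q_i \Delta) + 2 g_i^T x + d_i \le 0.
$$

The $x$-component therefore satisfies every original constraint, which is the feasibility property.

For uniqueness, note that the objective $\operatorname{Tr}(\Delta) - \|x\|^2$ is linear in $\Delta$ and strictly concave in $x$. If two maximizers had different $x$-components, their midpoint would also be feasible and would have a strictly larger objective. The midpoint is feasible because the relaxed set is convex, since by the Schur complement

$$\Delta \succeq x x^T \iff \begin{pmatrix} \Delta & x \\ x^T & 1 \end{pmatrix} \succeq 0.$$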

Constrained least squares

It can be shown that the well-known constrained least squares (CLS) problem is also a relaxed version of the Chebyshev center.

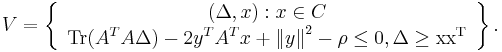

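Consider the linear model $y = Ax + w$, where the noise is bounded by $\left\| w \right\|^2 \le \rho$ and $x$ is known to lie in a set $C$. The feasibility set is then $Q = \left\{ x \in C : \left\| y - Ax \right\|^2 \le \rho \right\}$. The data-fit constraint $f_0(x) = \left\| y - Ax \right\|^2 - \rho \le 0$ has the quadratic form above with $Q_0 = A^T A$, $g_0 = -A^T y$ and $d_0 = \left\| y \right\|^2 - \rho$. The original CLS problem can be formulated as:

$$\hat x_{CLS} = \operatorname*{\arg\min}_{x \in C} \left\| y - Ax \right\|^2$$

with

$$C = \left\{ x : f_i(x) \le 0,\ 1 \le i \le k \right\}.$$

It can be shown that, when $A$ has full column rank, $\hat x_{CLS}$ is the $x$-component of the solution of the following optimization problem:

$$\max_{(\Delta, x) \in V} \left\{ -\left\| x \right\|^2 + \operatorname{Tr}(\Delta) \right\}$$

with

$$V = \left\{ (\Delta, x) : x \in C,\ f_0(\Delta, x) \le 0,\ \Delta \succeq x x^T \right\}.$$

One can see that this problem is a relaxation of the Chebyshev center problem, though a different one from the RCC described above. Only the data-fit constraint $f_0$ is relaxed, while the constraints defining $C$ are kept exact.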
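For intuition, the CLS estimate $\operatorname*{\arg\min}_{x \in C} \|y - Ax\|^2$ can be computed with a generic first-order method when $C$ is simple. The sketch below assumes $C$ is a box $[\text{lower}, \text{upper}]^n$, a hypothetical choice for illustration. It uses projected gradient descent with step $1/L$, where $L = 2\|A\|_2^2$ is the Lipschitz constant of the gradient. This is a standard swapped-in solver, not the method of the references. `cls_box` is a hypothetical name.

```python
import numpy as np


def cls_box(A, y, lower, upper, iters=5000):
    """Projected gradient for min ||y - A x||^2 subject to lower <= x <= upper."""
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    L = 2.0 * np.linalg.norm(A, 2) ** 2          # Lipschitz constant of the gradient
    x = np.clip(np.zeros(A.shape[1]), lower, upper)
    for _ in range(iters):
        grad = 2.0 * A.T @ (A @ x - y)           # gradient of ||y - A x||^2
        x = np.clip(x - grad / L, lower, upper)  # gradient step, then project onto the box
    return x
```

With $A = I$ and $y = (2, -0.5)$ on the box $[0, 1]^2$, the estimate is the projection $(1, 0)$. With a box wide enough to be inactive, the result coincides with ordinary least squares.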

RCC vs. CLS

Every pair $(\Delta, x)$ that is feasible for the RCC is also feasible for the CLS formulation, and thus $T \subseteq V$. This means that the CLS estimate is the solution of a looser relaxation than that of the RCC. Hence the optimal value of the CLS formulation is an upper bound on that of the RCC, which in turn is an upper bound on that of the exact Chebyshev center problem.

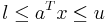

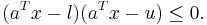

Modeling constraints

Since both the RCC and CLS are based upon relaxation of the real feasibility set $Q$, the form in which $Q$ is defined affects its relaxed versions, and hence the quality of the RCC and CLS estimators. As a simple example, consider the linear box constraints:

$$l \le a^T x \le u$$

which can alternatively be written as

$$(a^T x - l)(a^T x - u) \le 0.$$

It turns out that the first representation results in a looser relaxation, whose bound is an upper bound on the one obtained from the second representation. Using it may therefore dramatically decrease the quality of the computed estimator.

The reason is as follows. Under $\Delta \succeq x x^T$, the relaxed quadratic constraint $a^T \Delta a - (l + u) a^T x + l u \le 0$ implies $(a^T x)^2 - (l + u) a^T x + l u \le 0$, i.e. $l \le a^T x \le u$. The two linear constraints, by contrast, are unaffected by the lifting and place no restriction on $\Delta$.

This simple example shows that great care should be taken in formulating the constraints when a relaxation of the feasibility region is used.
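The box example can be checked in the scalar case $a = 1$, with $l = -1$ and $u = 1$ as illustrative values. In both relaxed versions, the lifted variable only has to satisfy $\Delta \ge x^2$. The helper names below are hypothetical.

```python
def lifted_ok(x, Delta):
    """Lifting constraint Delta >= x x^T, which in the scalar case is Delta >= x**2."""
    return Delta >= x * x


def linear_relaxation_ok(x, Delta, l, u):
    """Box as two linear constraints l <= x <= u.

    Linear constraints contain no x**2 term, so lifting leaves them
    unchanged and they never restrict Delta.
    """
    return lifted_ok(x, Delta) and l <= x <= u


def quadratic_relaxation_ok(x, Delta, l, u):
    """Box as (x - l)(x - u) <= 0, i.e. x**2 - (l + u) x + l u <= 0,
    relaxed by replacing x**2 with Delta."""
    return lifted_ok(x, Delta) and Delta - (l + u) * x + l * u <= 0
```

The pair $(x, \Delta) = (0, 100)$ passes the linear form but fails the quadratic one. Under the linear form it contributes $\operatorname{Tr}(\Delta) - x^2 = 100$ to the relaxed objective, while the quadratic form forces $\Delta \le 1$ here. Conversely, every pair accepted by the quadratic form is accepted by the linear one.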


References

- [1] Boyd, Stephen P.; Vandenberghe, Lieven (2004). Convex Optimization. New York: Cambridge University Press. ISBN 9780521833783.

- [2] Boyd, Stephen P.; Vandenberghe, Lieven (2004). Convex Optimization (PDF). Cambridge University Press. ISBN 9780521833783. http://www.stanford.edu/~boyd/cvxbook/bv_cvxbook.pdf. Retrieved October 15, 2011.

- Y. C. Eldar, A. Beck, and M. Teboulle, "A Minimax Chebyshev Estimator for Bounded Error Estimation," IEEE Trans. Signal Processing, 56(4): 1388–1397 (2008).

- A. Beck and Y. C. Eldar, "Regularization in Regression with Bounded Noise: A Chebyshev Center Approach," SIAM J. Matrix Anal. Appl. 29(2): 606–625 (2007).